Object Detection

Detects objects in video files, video streams, or images and lets us trigger actions with the detected data, such as the object name, bounding rectangle, and detection score. When an object is detected, we can also ask for subsequent frames. For instance, if we detect a person, we can configure the activity to capture 2 more snapshots, one every 2 seconds after the detection. In this way, we can build a surveillance system that takes a snapshot at detection time, takes 2 more snapshots 3 and 6 seconds later, and sends an email with the result.

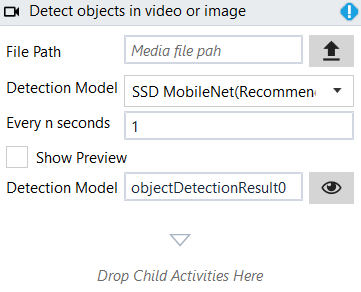

Designer Properties

- File Path The path to the file to be used for detection. It can be a video file (such as mp4 or avi), an RTSP stream, or another media file such as an image.

- Detection Model The model used for detection. At the moment, 5 models are supported, listed in order of the CPU power they require. The first 2 are recommended because they can run on any CPU with acceptable accuracy. For higher accuracy, we can try the others as well. The supported objects are: "person, bicycle, car, motorcycle, airplane, bus, train, truck, boat, traffic light, fire hydrant, stop sign, parking meter, bench, bird, cat, dog, horse, sheep, cow, elephant, bear, zebra, giraffe, backpack, umbrella, handbag, tie, suitcase, frisbee, skis, snowboard, sports ball, kite, baseball bat, baseball glove, skateboard, surfboard, tennis racket, bottle, wine glass, cup, fork, knife, spoon, bowl, banana, apple, sandwich, orange, broccoli, carrot, hot dog, pizza, donut, cake, chair, couch, potted plant, bed, dining table, toilet, tv, laptop, mouse, remote, keyboard, cell phone, microwave, oven, toaster, sink, refrigerator, book, clock, vase, scissors, teddy bear, hair drier, toothbrush"

- Every n seconds Checks one frame every n seconds and ignores all frames in between.

- Show Preview Shows a preview (for videos only) when running the workflow in Rinkt Studio.

- Detection Result The result of one detection, represented as VisionDetectionResults. A result may cover multiple objects in the same frame; it contains a snapshot of the frame, the detected objects, and the subsequent images, if any.
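To illustrate what the Every n seconds setting implies, here is a minimal Python sketch of frame sampling. It is not the activity's actual implementation: the function name `frames_to_check` and its parameters are hypothetical, and it assumes the video has a constant frame rate.

```python
def frames_to_check(total_frames: int, fps: float, every_n_seconds: float) -> list[int]:
    """Return the indices of the frames that would be inspected.

    Hypothetical helper: assumes a constant frame rate and that sampling
    starts at the first frame of the video.
    """
    if fps <= 0 or every_n_seconds <= 0:
        raise ValueError("fps and every_n_seconds must be positive")
    # Number of frames to skip between two checks (at least 1).
    step = max(1, round(fps * every_n_seconds))
    return list(range(0, total_frames, step))


# A 4-second clip at 25 fps, checked once per second.
print(frames_to_check(total_frames=100, fps=25, every_n_seconds=1))  # [0, 25, 50, 75]
```

Under these assumptions only 4 of the 100 frames are passed to the model, which is why a larger n reduces CPU load.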

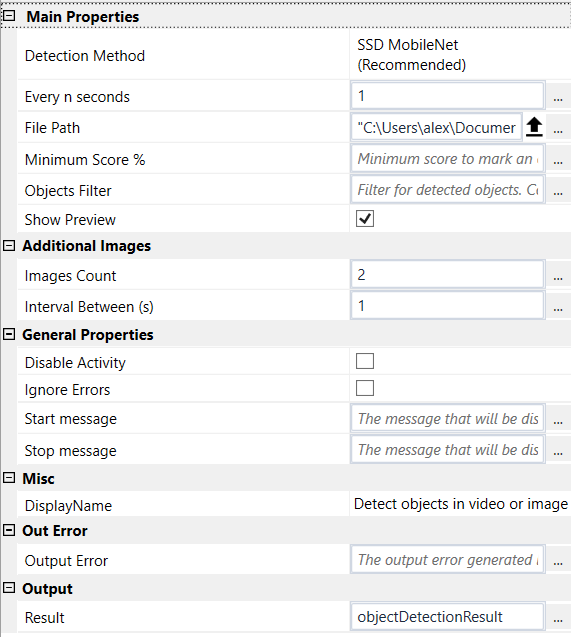

Properties

Azure Properties

- See Designer Properties above.

- Minimum Score % The minimum score required to mark an object as detected. The default is 50 for Yolo and 40 for the other models.

- Object Filter A filter for detected objects: a comma-separated list of the object names to process, for example 'person,bicycle,car,truck,cat,dog...'. By default, all supported objects are handled.
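The combined effect of Minimum Score % and Object Filter can be sketched in Python as follows. This is only an illustration: the `(name, score)` tuple shape and the function `filter_detections` are hypothetical, and it assumes a score equal to the minimum counts as detected and that an empty filter means all objects.

```python
def filter_detections(detections, min_score_percent=50.0, object_filter=""):
    """Keep detections that meet the minimum score and match the filter.

    detections: list of (name, score_percent) tuples (hypothetical shape).
    object_filter: comma-separated object names; empty means "all objects".
    """
    allowed = {name.strip().lower() for name in object_filter.split(",") if name.strip()}
    return [
        (name, score)
        for name, score in detections
        # Assumption: the threshold is inclusive.
        if score >= min_score_percent and (not allowed or name.lower() in allowed)
    ]


raw = [("person", 87.0), ("dog", 45.0), ("car", 62.5), ("cat", 91.0)]
print(filter_detections(raw, 50, "person,car,truck"))  # [('person', 87.0), ('car', 62.5)]
```

Here the dog is dropped because its score is below 50, and the cat is dropped because it is not in the filter.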

Additional Images

- Images Count The number of additional images to receive after an object is detected. For instance, when an object is detected we may want the next 2 frames, taken 2 seconds apart.

- Interval Between (s) The interval, in seconds, between the subsequent snapshots taken after an object has been detected. For instance, to take 2 more snapshots 2 seconds apart after a detection, we set this value to 2.
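As a rough sketch of how Images Count and Interval Between (s) translate into a snapshot schedule, the Python function below lists the time offsets, in seconds from the detection, at which snapshots would be taken. The name `snapshot_offsets` is hypothetical, and it assumes the first additional image is taken one full interval after the detection.

```python
def snapshot_offsets(images_count: int, interval_seconds: float) -> list[float]:
    """Offsets (seconds after detection) of the detection snapshot and the
    additional images. Hypothetical helper, not part of the product API."""
    if images_count < 0 or interval_seconds < 0:
        raise ValueError("images_count and interval_seconds must not be negative")
    # Offset 0 is the detection snapshot itself; the rest are the additional images.
    return [i * interval_seconds for i in range(images_count + 1)]


# Images Count = 2, Interval Between (s) = 2
print(snapshot_offsets(2, 2))  # [0, 2, 4]
```

With Images Count = 2 and an interval of 3, the same function yields offsets 0, 3, and 6, matching the surveillance scenario described in the introduction.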

General Properties

See General Properties.

Misc

See Misc.

Out Error

See Out Error.

Result

- Detection Result See Designer Properties above.

Example

Sample video for object detection.

In this sample we load a video and apply object detection to it to find the frames that contain objects. The frames found are saved to disk. To avoid overloading the CPU, the object detection activity is configured in its properties to check one frame every second and to capture 2 subsequent frames, 1 second apart.

This example can be changed to use an RTSP stream from a CCTV camera and send an email notification when a person is detected.

Please make sure you update the File Path for object detection before running this example.